moabb.evaluations.base.BaseEvaluation

- class moabb.evaluations.base.BaseEvaluation(paradigm, datasets=None, random_state=None, n_jobs=1, overwrite=False, error_score='raise', suffix='', hdf5_path=None, additional_columns=None, return_epochs=False, return_raws=False, mne_labels=False, n_splits=None, save_model=False, cache_config=None, optuna=False, time_out=900, verbose=None)

Base class that defines the operations required for an evaluation. Evaluations determine what the train and test sets are and can implement additional data preprocessing steps for more complex algorithms.

- Parameters:

paradigm (Paradigm instance) – The paradigm to use.

datasets (list of Dataset instances) – The list of datasets on which to run the evaluation. If None, the list of compatible datasets is retrieved from the paradigm instance.

random_state (int, RandomState instance, default=None) – If not None, guarantees that the same seed is used for shuffling examples.

n_jobs (int, default=1) – Number of jobs used to fit the pipelines.

overwrite (bool, default=False) – If True, overwrite existing results.

error_score ("raise" or numeric, default="raise") – Value to assign to the score if an error occurs during estimator fitting. If set to "raise", the error is raised.

suffix (str) – Suffix for the results file.

hdf5_path (str) – Specific path for storing the results.

additional_columns (None) – Additional information to add to the results.

return_epochs (bool, default=False) – If True, use MNE Epochs to train pipelines.

return_raws (bool, default=False) – If True, use MNE Raw to train pipelines.

mne_labels (bool, default=False) – When returning MNE Epochs, use the original dataset labels if True.

n_splits (int, default=None) – Number of splits for cross-validation. If None, the number of splits is equal to the number of subjects.

save_model (bool, default=False) – If True, save the model after training, for each cross-validation fold if needed.

cache_config (bool, default=None) – Configuration for caching of datasets. See moabb.datasets.base.CacheConfig for details.

optuna (bool, default=False) – If True, the GridSearch is replaced by a RandomizedGridSearch with a 15-minute cut-off time. This option is compatible with lists of entries of type None, bool, int, float and string.

time_out (default=60*15) – Cut-off time for the Optuna search, in seconds; the default is 15 minutes. Only used when optuna is True.

verbose (bool, str, int, default=None) – If not None, override the default MOABB logging level used by this evaluation (see moabb.utils.verbose() for more information on how this is handled). If used, it should be passed as a keyword argument only.
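The n_splits rule above (None means one split per subject) can be illustrated with a small, stdlib-only sketch. It assumes a cross-subject setting in which each subject forms one group; assign_subject_folds is a hypothetical helper, not part of MOABB, and MOABB's own splitters may order or balance the folds differently.

```python
from typing import List, Optional


def assign_subject_folds(subjects: List[int], n_splits: Optional[int] = None) -> List[List[int]]:
    """Split subjects into test folds (hypothetical helper, not part of MOABB).

    With n_splits=None every subject gets its own fold, so the number of
    splits equals the number of subjects (leave-one-subject-out).
    """
    if n_splits is None:
        n_splits = len(subjects)
    if not 1 <= n_splits <= len(subjects):
        raise ValueError("n_splits must be between 1 and the number of subjects")
    folds: List[List[int]] = [[] for _ in range(n_splits)]
    # Round-robin assignment keeps fold sizes within one subject of each other.
    # Each fold is used once as the test set; the other subjects form the training set.
    for i, subject in enumerate(subjects):
        folds[i % n_splits].append(subject)
    return folds


subjects = [1, 2, 3, 4, 5]
print(assign_subject_folds(subjects))              # [[1], [2], [3], [4], [5]]
print(assign_subject_folds(subjects, n_splits=2))  # [[1, 3, 5], [2, 4]]
```

Round-robin assignment is only one reasonable choice here; it keeps the sketch deterministic and easy to check by hand.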

Notes

Added in version 1.1.0: n_splits, save_model, cache_config parameters.

Added in version 1.1.1: optuna, time_out parameters.

Added in version 1.5: verbose parameter.

- abstract evaluate(dataset, pipelines, param_grid, process_pipeline, postprocess_pipeline=None)

Evaluate results on a single dataset.

This method returns a generator. Each result item is a dict with the following convention:

res = {'time': duration of the training, 'dataset': dataset id, 'subject': subject id, 'session': session id, 'score': score, 'n_samples': number of training examples, 'n_channels': number of channels, 'pipeline': pipeline name}
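To make this generator contract concrete, here is a toy, stdlib-only sketch of an evaluate-style generator that yields one dict per subject and session with the keys listed above. toy_evaluate, its input layout and the majority-class "model" are hypothetical stand-ins; a real subclass would fit the given pipeline on its training split and score it on the test split.

```python
import time
from typing import Dict, Iterator, List, Tuple

# Hypothetical input layout: {(subject, session): (samples, labels)}, where each
# sample is a list of per-channel values, so len(sample) stands in for n_channels.
Data = Dict[Tuple[int, str], Tuple[List[List[float]], List[int]]]


def toy_evaluate(dataset_code: str, pipeline_name: str, data: Data) -> Iterator[dict]:
    """Yield one result dict per (subject, session), following the keys listed above."""
    for (subject, session), (X, y) in sorted(data.items()):
        start = time.perf_counter()
        # Toy split: first half of the trials for training, second half for testing.
        half = len(y) // 2
        X_train, y_train, y_test = X[:half], y[:half], y[half:]
        # Toy "model": always predict the majority class seen in training.
        majority = max(set(y_train), key=y_train.count)
        score = sum(label == majority for label in y_test) / len(y_test)
        yield {
            "time": time.perf_counter() - start,  # elapsed time of this toy fit-and-score
            "dataset": dataset_code,
            "subject": subject,
            "session": session,
            "score": score,
            "n_samples": len(X_train),  # number of training examples
            "n_channels": len(X[0]),
            "pipeline": pipeline_name,
        }


data = {(1, "0"): ([[0.1, 0.2, 0.3]] * 4, [0, 0, 1, 0])}
for res in toy_evaluate("ToyDataset", "MajorityClass", data):
    print(res["subject"], res["session"], res["score"], res["n_samples"])
```

Because the results are yielded lazily, a caller can consume and store them one at a time instead of holding every fold's output in memory.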

- abstract is_valid(dataset)

Verify the dataset is compatible with evaluation.

This method is called to verify that the datasets given in the constructor are compatible with the evaluation context.

This method should return False if the dataset does not match the evaluation, for example if the dataset does not contain enough sessions for a cross-session evaluation.

- Parameters:

dataset (dataset instance) – The dataset to verify.
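As an illustration of the cross-session case mentioned above, a validity check can simply require more than one session. The sketch below is stdlib-only: ToyDataset, ToyEvaluationBase and ToyCrossSessionEvaluation are hypothetical stand-ins, and the n_sessions attribute is an assumption about the dataset object rather than something documented on this page.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class ToyDataset:
    code: str
    n_sessions: int  # assumed attribute: number of recording sessions


class ToyEvaluationBase(ABC):
    @abstractmethod
    def is_valid(self, dataset) -> bool:
        """Return False if the dataset cannot be used by this evaluation."""


class ToyCrossSessionEvaluation(ToyEvaluationBase):
    def is_valid(self, dataset) -> bool:
        # Cross-session evaluation trains on some sessions and tests on another,
        # so it needs at least two sessions.
        return dataset.n_sessions > 1


evaluation = ToyCrossSessionEvaluation()
datasets = [ToyDataset("single_session", 1), ToyDataset("two_sessions", 2)]
compatible = [d.code for d in datasets if evaluation.is_valid(d)]
print(compatible)  # ['two_sessions']
```

Declaring is_valid as an abstract method means the base class cannot be instantiated directly, which mirrors the "abstract" marker on the methods above.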

- process(pipelines, param_grid=None, postprocess_pipeline=None)

Runs all pipelines on all datasets.

This method applies all provided pipelines and returns a DataFrame containing the results of the evaluation.

- Parameters:

pipelines (dict of pipeline instances) – A dict containing the sklearn pipelines to evaluate.

param_grid (dict of str) – The keys of the dictionary must match the names of the associated pipelines.

postprocess_pipeline (Pipeline | None) – Optional pipeline to apply to the data after the preprocessing. This pipeline will either receive mne.io.BaseRaw, mne.Epochs or np.ndarray as input, depending on the values of return_epochs and return_raws. This pipeline must return an np.ndarray. This pipeline must be “fixed” because it will not be trained, i.e. no call to fit will be made.

- Returns:

results – A dataframe containing the results.

- Return type:

pd.DataFrame
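The sketch below mimics, in plain Python, the bookkeeping that process describes: pipelines and param_grid share the same keys, an optional postprocessing step is only applied (never fitted), and one result row is collected per pipeline. toy_process, the plain callables used as "pipelines" and the list-of-dicts output are hypothetical simplifications; MOABB itself works with scikit-learn pipelines and returns a pandas DataFrame.

```python
from typing import Callable, Dict, List, Optional

Trial = List[float]
Scorer = Callable[[List[Trial]], float]


def toy_process(
    pipelines: Dict[str, Scorer],
    data: List[Trial],
    param_grid: Optional[Dict[str, dict]] = None,
    postprocess: Optional[Callable[[List[Trial]], List[Trial]]] = None,
) -> List[dict]:
    """Hypothetical stand-in for process(): returns one row per pipeline."""
    param_grid = param_grid or {}
    # Design choice of this sketch: reject param_grid keys that name no pipeline.
    unknown = set(param_grid) - set(pipelines)
    if unknown:
        raise KeyError(f"param_grid keys without a matching pipeline: {sorted(unknown)}")
    # The postprocessing step is "fixed": it is applied as-is and never fitted.
    X = postprocess(data) if postprocess is not None else data
    rows = []
    for name, pipeline in pipelines.items():
        # The grid is only recorded here; no hyperparameter search is run.
        rows.append({"pipeline": name, "score": pipeline(X), "grid": param_grid.get(name)})
    return rows


def demean(trials: List[Trial]) -> List[Trial]:
    # Fixed transform with no learned state: subtract each trial's own mean.
    return [[v - sum(t) / len(t) for v in t] for t in trials]


def mean_abs(trials: List[Trial]) -> float:
    # Toy "pipeline" that returns a number standing in for a score.
    return sum(abs(v) for t in trials for v in t) / sum(len(t) for t in trials)


rows = toy_process(
    {"MeanAbs": mean_abs},
    [[1.0, 2.0, 3.0], [4.0, 6.0, 8.0]],
    param_grid={"MeanAbs": {"C": [0.1, 1.0]}},
    postprocess=demean,
)
print(rows)
```

Raising on unmatched param_grid keys is a choice made for this sketch only; it is not a documented MOABB behavior.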

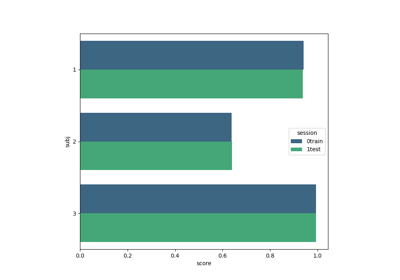

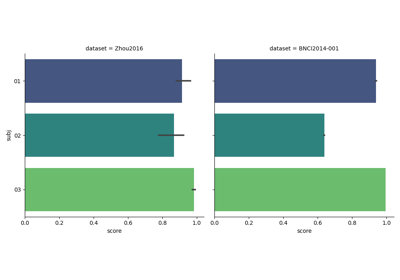

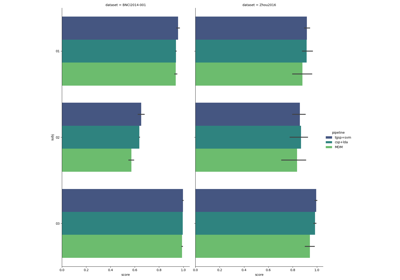

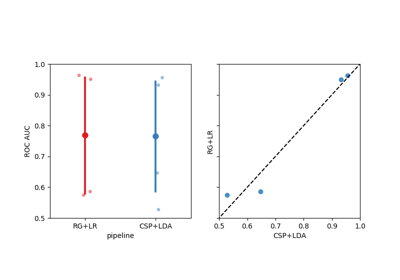

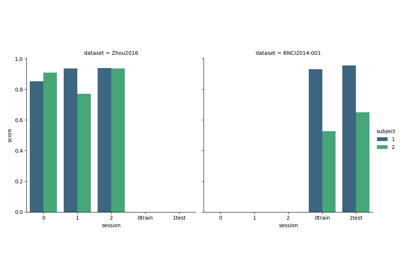

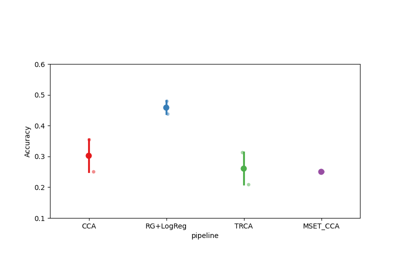

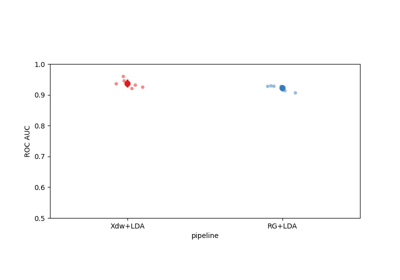

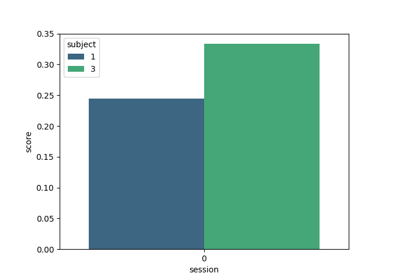

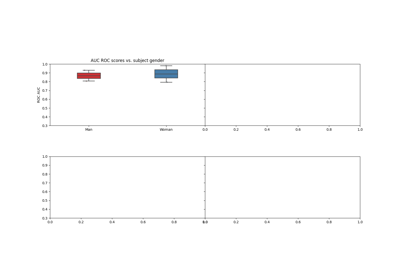

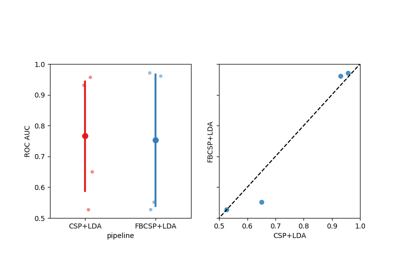

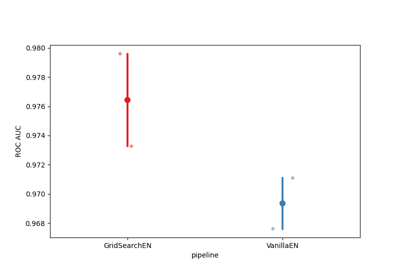

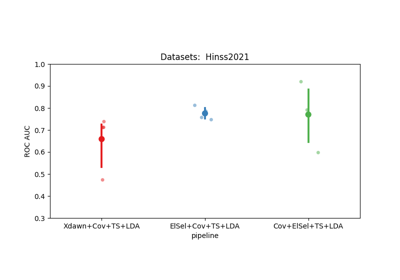

Examples using moabb.evaluations.base.BaseEvaluation

Using X y data (epoched data) instead of continuous signal