
Benchmarking on MOABB with Braindecode (PyTorch) deep net architectures

This example shows how to use MOABB to benchmark a set of Braindecode pipelines (deep learning architectures) on all available datasets. For this example, we will use only 2 datasets to keep the computation time low, but this benchmark is designed to easily scale to many datasets.

# Authors: Igor Carrara <igor.carrara@inria.fr>

# Bruno Aristimunha <b.aristimunha@gmail.com>

# Sylvain Chevallier <sylvain.chevallier@universite-paris-saclay.fr>

#

# License: BSD (3-clause)

import os

import matplotlib.pyplot as plt

import torch

from absl.logging import ERROR, set_verbosity

from moabb import benchmark, set_log_level

from moabb.analysis.plotting import score_plot

from moabb.datasets import BNCI2014_001, BNCI2014_004

from moabb.utils import setup_seed

set_log_level("info")

# Reduce log noise: show only absl errors and hide TensorFlow C++ log messages

set_verbosity(ERROR)

os.environ["TF_CPP_MIN_LOG_LEVEL"] = "3"

# Print the PyTorch version

print(f"Torch Version: {torch.__version__}")

# Use the GPU if one is available

cuda = torch.cuda.is_available()

device = "cuda" if cuda else "cpu"

print("GPU is", "AVAILABLE" if cuda else "NOT AVAILABLE")

Torch Version: 1.13.1+cu117

GPU is NOT AVAILABLE

In this example, we use only 2 subjects from each of the datasets BNCI2014_001 and BNCI2014_004.

Running the benchmark

The benchmark is run using the benchmark function. You need to specify the folder containing the pipelines, the kind of evaluation, and the paradigm to use. By default, the benchmark uses all available datasets for all paradigms listed in the pipelines. You can restrict it to specific evaluations and paradigms with the evaluations and paradigms arguments.
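To make the default behaviour concrete, here is a minimal, hypothetical sketch of how the set of paradigms could be resolved: every paradigm declared by any pipeline is used unless an explicit paradigms list is passed, in which case only the requested (and supported) ones are kept. The dictionary layout with a "paradigms" key and the function name select_paradigms are assumptions for illustration, not MOABB's internal code.

```python
from typing import Iterable, List, Optional


def select_paradigms(
    pipeline_configs: Iterable[dict], paradigms: Optional[List[str]] = None
) -> List[str]:
    """Return the sorted list of paradigms to evaluate (hypothetical helper).

    Each config is assumed to carry a "paradigms" list, e.g.
    {"name": "braindecode_example", "paradigms": ["LeftRightImagery"]}.
    """
    # Collect every paradigm declared by at least one pipeline.
    available = set()
    for config in pipeline_configs:
        available.update(config.get("paradigms", []))
    if paradigms is None:
        # Default: run all paradigms that appear in the pipelines.
        return sorted(available)
    # Restriction: keep only requested paradigms that some pipeline supports.
    return sorted(p for p in paradigms if p in available)
```

With paradigms=["LeftRightImagery"], as in the call below, only pipelines declaring that paradigm would be evaluated under this sketch.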

To save computation time, the results are cached. If you want to re-run the benchmark, set the overwrite argument to True.

You can choose the folder where the results are cached and the folder where the analysis and figures are saved. By default, the results are saved in the results folder, and the analysis and figures in the benchmark folder.
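The caching and overwrite behaviour can be pictured with the simplified sketch below. It stores one JSON file per result key in a results directory and only recomputes when the file is missing or overwrite=True. The helper run_or_load, the JSON format and the file naming are hypothetical stand-ins; MOABB's actual result storage is different.

```python
import json
from pathlib import Path
from typing import Callable


def run_or_load(
    key: str, compute: Callable[[], dict], results_dir: str, overwrite: bool = False
) -> dict:
    """Return cached results for `key`, recomputing only when needed (hypothetical)."""
    cache_file = Path(results_dir) / f"{key}.json"
    if cache_file.exists() and not overwrite:
        # Cache hit: reuse the stored results instead of re-running the evaluation.
        return json.loads(cache_file.read_text())
    # Cache miss (or forced overwrite): run the evaluation and store the result.
    result = compute()
    cache_file.parent.mkdir(parents=True, exist_ok=True)
    cache_file.write_text(json.dumps(result))
    return result
```

The design choice mirrors the text above: a second call with the same key is essentially free, and overwrite=True is the explicit way to force a fresh run.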

This code is set up to run on CPU. If you are using a GPU, do not run jobs in parallel (i.e. set n_jobs=1).
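One way to follow this advice automatically is to derive n_jobs from the device string chosen earlier ("cuda" or "cpu"). The helper choose_n_jobs is hypothetical; it simply encodes the rule stated above, using the joblib convention that -1 means "use all available cores".

```python
def choose_n_jobs(device: str) -> int:
    """Pick an n_jobs value for the given device (hypothetical helper)."""
    # On a GPU, run sequentially: parallel jobs would share the same device.
    if device.startswith("cuda"):
        return 1
    # On CPU, -1 asks joblib-style code to use all available cores.
    return -1
```

It could then be passed as n_jobs=choose_n_jobs(device) in the benchmark call.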

For the benchmark function to work with return_epochs=True (required to use Braindecode), each pipeline must be named "braindecode_xxx…", where xxx is the name of the model, so that the benchmark function handles it correctly.
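The naming rule can be illustrated with a small check of the kind a benchmark runner might apply to decide whether a pipeline needs epochs. The helpers needs_epochs and model_name are made up for this sketch and are not part of MOABB; the model names in the usage lines are only examples.

```python
PREFIX = "braindecode_"


def needs_epochs(pipeline_name: str) -> bool:
    """True if the name follows the braindecode_<model> convention (hypothetical)."""
    return pipeline_name.startswith(PREFIX)


def model_name(pipeline_name: str) -> str:
    """Extract the <model> part of a braindecode_<model> pipeline name."""
    if not needs_epochs(pipeline_name):
        raise ValueError(f"{pipeline_name!r} does not start with {PREFIX!r}")
    return pipeline_name[len(PREFIX):]
```

For example, needs_epochs("braindecode_EEGNetv4") is True, while a classical pipeline name such as "CSP+LDA" would not be routed to the epoch-based path.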

# Fix the random seeds for reproducibility

setup_seed(42)

# Restrict this example to the first two subjects of each dataset (BNCI2014_001 and BNCI2014_004)

dataset = BNCI2014_001()

dataset2 = BNCI2014_004()

dataset.subject_list = dataset.subject_list[:2]

dataset2.subject_list = dataset2.subject_list[:2]

datasets = [dataset, dataset2]

results = benchmark(
    pipelines="./pipelines_braindecode",
    evaluations=["CrossSession"],
    paradigms=["LeftRightImagery"],
    include_datasets=datasets,
    results="./results/",
    overwrite=False,
    plot=False,
    output="./benchmark/",
    n_jobs=-1,  # set n_jobs=1 when running on a GPU
)

BNCI2014-001-CrossSession: 0%| | 0/2 [00:00<?, ?it/s]

/home/runner/work/moabb/moabb/moabb/datasets/preprocessing.py:279: UserWarning: warnEpochs <Epochs | 24 events (all good), 2 – 6 s (baseline off), ~4.1 MB, data loaded,

'left_hand': 12

'right_hand': 12>

warn(f"warnEpochs {epochs}")

/home/runner/work/moabb/moabb/.venv/lib/python3.9/site-packages/braindecode/models/base.py:180: UserWarning: LogSoftmax final layer will be removed! Please adjust your loss function accordingly (e.g. CrossEntropyLoss)!

warnings.warn("LogSoftmax final layer will be removed! " +

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4219 1.1057 0.4483 0.8093 0.3210

2 0.4844 0.7672 0.4483 0.8043 0.2810

3 0.4531 1.0164 0.4483 0.7982 0.3089

4 0.5156 0.8216 0.4483 0.7914 0.2796

5 0.5312 0.7809 0.4483 0.7834 0.2792

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.3906 1.0376 0.4828 0.9379 0.3297

2 0.5469 0.8678 0.4828 0.9140 0.2792

3 0.5156 0.7991 0.4828 0.8924 0.2787

4 0.5000 0.8015 0.4483 0.8746 0.2875

5 0.5781 0.6971 0.4483 0.8572 0.2876

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5625 0.6802 0.4828 0.6926 0.1560

2 0.4688 0.7433 0.4828 0.6927 0.1042

3 0.5000 0.7208 0.4828 0.6928 0.1026

Stopping since valid_loss has not improved in the last 3 epochs.

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5625 0.6887 0.4483 0.7004 0.1045

2 0.5781 0.7029 0.4483 0.7003 0.1035

3 0.5938 0.6907 0.4483 0.7002 0.1031

4 0.5312 0.6996 0.4483 0.7001 0.1028

5 0.5312 0.6758 0.4483 0.7000 0.1032

6 0.5469 0.7148 0.4483 0.6999 0.1040

7 0.5625 0.6850 0.4483 0.6998 0.1397

8 0.5469 0.6997 0.4828 0.6998 0.1188

9 0.5000 0.6883 0.4483 0.6997 0.1016

10 0.5312 0.7058 0.4483 0.6997 0.1013

/home/runner/work/moabb/moabb/.venv/lib/python3.9/site-packages/torch/nn/modules/conv.py:459: UserWarning: Using padding='same' with even kernel lengths and odd dilation may require a zero-padded copy of the input be created (Triggered internally at ../aten/src/ATen/native/Convolution.cpp:895.)

return F.conv2d(input, weight, bias, self.stride,

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5156 1.0825 0.4483 0.6958 0.5901

2 0.4062 0.9912 0.4483 0.6960 0.6174

3 0.4688 1.0518 0.4483 0.6962 0.5681

Stopping since valid_loss has not improved in the last 3 epochs.

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5156 0.9333 0.5172 0.6946 0.5958

2 0.4531 0.9715 0.5172 0.6946 0.5662

3 0.6250 0.7161 0.5172 0.6947 0.6047

Stopping since valid_loss has not improved in the last 3 epochs.

BNCI2014-001-CrossSession: 50%|##### | 1/2 [00:16<00:16, 16.80s/it]

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5156 1.3883 0.5172 1.4938 0.2817

2 0.5312 1.3381 0.4828 1.2332 0.3072

3 0.4219 1.7567 0.4483 1.0417 0.2799

4 0.4062 1.3712 0.4483 0.8965 0.2785

5 0.5156 1.2690 0.4138 0.8003 0.2806

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4531 0.9311 0.5862 0.7616 0.2800

2 0.4688 1.1119 0.5862 0.7374 0.2793

3 0.5156 1.0635 0.5862 0.7240 0.2792

4 0.5312 0.9501 0.5862 0.7134 0.3154

5 0.5625 1.1596 0.5517 0.7047 0.2802

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4844 0.7917 0.5172 0.7080 0.0911

2 0.5312 0.7770 0.5172 0.7055 0.0907

3 0.5781 0.6989 0.5172 0.7030 0.0895

4 0.4688 0.7869 0.5172 0.7010 0.0900

5 0.5312 0.7898 0.5172 0.6996 0.0907

6 0.4688 0.7722 0.5172 0.6986 0.0901

7 0.6250 0.7083 0.5172 0.6977 0.0897

8 0.4688 0.7945 0.5172 0.6970 0.0901

9 0.4375 0.7666 0.5172 0.6963 0.0901

10 0.4844 0.7699 0.5172 0.6958 0.0902

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5625 0.6843 0.5862 0.6885 0.0928

2 0.6719 0.6603 0.5517 0.6886 0.1259

3 0.5781 0.6838 0.5517 0.6887 0.0899

Stopping since valid_loss has not improved in the last 3 epochs.

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5000 1.0273 0.4828 0.9603 0.5795

2 0.5938 0.7457 0.4828 0.9244 0.6128

3 0.4844 1.0134 0.4828 0.8896 0.5672

4 0.5781 0.8293 0.4828 0.8609 0.5840

5 0.4688 1.0145 0.4828 0.8388 0.5618

6 0.5312 1.0438 0.4828 0.8229 0.5770

7 0.4531 1.0184 0.4828 0.8080 0.5625

8 0.5625 0.8524 0.4828 0.7972 0.5802

9 0.4844 1.1351 0.4828 0.7869 0.5910

10 0.4062 1.1848 0.4828 0.7786 0.5821

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4531 0.9541 0.4828 0.8511 0.6006

2 0.4844 0.8626 0.4828 0.8526 0.5634

3 0.5156 0.8936 0.4828 0.8538 0.5859

Stopping since valid_loss has not improved in the last 3 epochs.

BNCI2014-001-CrossSession: 100%|##########| 2/2 [00:36<00:00, 18.50s/it]

BNCI2014-004-CrossSession: 0%| | 0/2 [00:00<?, ?it/s]

/home/runner/work/moabb/moabb/.venv/lib/python3.9/site-packages/urllib3/connectionpool.py:1064: InsecureRequestWarning: Unverified HTTPS request is being made to host 'lampx.tugraz.at'. Adding certificate verification is strongly advised. See: https://urllib3.readthedocs.io/en/1.26.x/advanced-usage.html#ssl-warnings

warnings.warn(

/home/runner/work/moabb/moabb/moabb/datasets/preprocessing.py:279: UserWarning: warnEpochs <Epochs | 120 events (all good), 3 – 7.5 s (baseline off), ~3.1 MB, data loaded,

'left_hand': 60

'right_hand': 60>

warn(f"warnEpochs {epochs}")

/home/runner/work/moabb/moabb/moabb/datasets/preprocessing.py:279: UserWarning: warnEpochs <Epochs | 160 events (all good), 3 – 7.5 s (baseline off), ~4.1 MB, data loaded,

'left_hand': 80

'right_hand': 80>

warn(f"warnEpochs {epochs}")

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4888 0.9723 0.5000 1.2424 0.8023

2 0.4732 0.9305 0.4917 0.8491 0.7909

3 0.5402 0.8306 0.4750 0.8782 0.7986

4 0.5268 0.7983 0.4750 0.8321 0.7882

5 0.5692 0.8193 0.5417 0.7775 0.7823

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4531 0.9950 0.4583 0.7885 0.7921

2 0.5491 0.8640 0.4917 0.7651 0.7812

3 0.5513 0.8236 0.5583 0.7418 0.7511

4 0.5759 0.7701 0.5917 0.7257 0.7950

5 0.5759 0.7864 0.6000 0.7143 0.7841

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5067 0.9092 0.5446 0.7741 0.7980

2 0.5089 0.9135 0.5268 0.7341 0.7523

3 0.5134 0.8492 0.5536 0.7097 0.7782

4 0.5692 0.7980 0.5357 0.6884 0.7844

5 0.6183 0.7254 0.6071 0.6660 0.7502

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5067 0.8808 0.5000 0.8226 0.7627

2 0.5223 0.8515 0.5625 0.7452 0.7916

3 0.5446 0.8533 0.5893 0.7133 0.7949

4 0.5714 0.7774 0.6071 0.6870 0.7560

5 0.5647 0.7651 0.6161 0.6625 0.7834

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4688 0.9521 0.5268 0.8605 0.7909

2 0.5223 0.9419 0.5446 0.8796 0.7789

3 0.5223 0.9198 0.6161 0.6962 0.7489

4 0.5982 0.7665 0.6607 0.6312 0.7878

5 0.5915 0.7418 0.6607 0.6130 0.7822

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4955 0.7649 0.4500 0.6942 0.2045

2 0.5112 0.7700 0.4333 0.6943 0.1986

3 0.4978 0.7441 0.4500 0.6944 0.2250

Stopping since valid_loss has not improved in the last 3 epochs.

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4799 0.7453 0.4167 0.6940 0.1960

2 0.4888 0.7302 0.4417 0.6941 0.2304

3 0.4911 0.7319 0.4583 0.6941 0.1963

Stopping since valid_loss has not improved in the last 3 epochs.

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4732 0.7531 0.4911 0.6932 0.1950

2 0.5067 0.7178 0.4911 0.6932 0.2218

3 0.5022 0.7261 0.5089 0.6932 0.1969

Stopping since valid_loss has not improved in the last 3 epochs.

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4665 0.7986 0.5625 0.6932 0.2392

2 0.4821 0.7955 0.5446 0.6931 0.2068

3 0.4821 0.7672 0.4911 0.6930 0.1959

4 0.4732 0.7927 0.4911 0.6929 0.1926

5 0.4777 0.7656 0.5000 0.6928 0.1944

6 0.5022 0.7429 0.5000 0.6926 0.2107

7 0.4777 0.7642 0.4911 0.6925 0.2099

8 0.5246 0.7434 0.5000 0.6922 0.1939

9 0.5335 0.7205 0.5179 0.6920 0.1954

10 0.5312 0.7337 0.5179 0.6917 0.1924

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5268 0.7237 0.5179 0.6929 0.2039

2 0.5156 0.7170 0.5268 0.6929 0.2116

3 0.5179 0.7244 0.5000 0.6929 0.1932

Stopping since valid_loss has not improved in the last 3 epochs.

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5134 0.8990 0.5000 0.6910 1.0897

2 0.5223 0.8714 0.5000 0.6903 1.0856

3 0.5112 0.9039 0.4917 0.6894 1.0866

4 0.5424 0.8663 0.4917 0.6885 1.0982

5 0.5045 0.8688 0.4917 0.6875 1.0965

6 0.5246 0.8604 0.5000 0.6863 1.0780

7 0.5045 0.8932 0.5000 0.6849 1.0917

8 0.5335 0.8598 0.5000 0.6834 1.0817

9 0.5424 0.8327 0.5250 0.6816 1.0835

10 0.5022 0.8716 0.5333 0.6800 1.0903

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5335 0.9087 0.4750 0.6933 1.0935

2 0.5000 0.9112 0.5250 0.6945 1.0782

3 0.5089 0.9152 0.5167 0.6982 1.0718

Stopping since valid_loss has not improved in the last 3 epochs.

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5067 0.9704 0.4732 0.6966 1.0916

2 0.4821 1.0069 0.4732 0.6959 1.0918

3 0.4821 0.9971 0.5000 0.6952 1.0824

4 0.4911 0.9896 0.5089 0.6945 1.0877

5 0.4955 1.0036 0.5357 0.6939 1.0710

6 0.5089 0.9354 0.5446 0.6933 1.0838

7 0.5246 0.9662 0.5625 0.6928 1.0783

8 0.5022 0.9581 0.5714 0.6922 1.0715

9 0.5045 0.9717 0.5893 0.6918 1.0779

10 0.5022 0.9458 0.5982 0.6912 1.0719

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5379 0.8689 0.5000 0.6898 1.0784

2 0.5201 0.9058 0.5804 0.6827 1.0894

3 0.4531 0.9623 0.6071 0.6776 1.0918

4 0.5379 0.8689 0.5714 0.6736 1.0748

5 0.5402 0.8560 0.5804 0.6705 1.0710

6 0.4978 0.8994 0.6161 0.6680 1.0734

7 0.4844 0.9570 0.6161 0.6657 1.0907

8 0.5312 0.8470 0.5982 0.6638 1.0814

9 0.5134 0.8640 0.5804 0.6622 1.0914

10 0.5402 0.8632 0.5804 0.6608 1.0703

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5067 0.9206 0.5000 0.6990 1.0943

2 0.5067 0.9286 0.5179 0.6986 1.0932

3 0.5179 0.8948 0.4911 0.6984 1.0869

4 0.4821 0.9689 0.5179 0.6984 1.0808

5 0.4911 0.9309 0.4375 0.6985 1.0675

Stopping since valid_loss has not improved in the last 3 epochs.

BNCI2014-004-CrossSession: 50%|##### | 1/2 [01:20<01:20, 80.53s/it]

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4464 1.0185 0.5000 1.1524 0.7555

2 0.4464 0.9894 0.4464 0.8213 0.7874

3 0.4821 0.9262 0.5089 0.7942 0.7878

4 0.5022 0.9367 0.5000 0.7740 0.7542

5 0.4933 0.9051 0.4821 0.7528 0.7783

/home/runner/work/moabb/moabb/.venv/lib/python3.9/site-packages/braindecode/models/base.py:180: UserWarning: LogSoftmax final layer will be removed! Please adjust your loss function accordingly (e.g. CrossEntropyLoss)!

warnings.warn("LogSoftmax final layer will be removed! " +

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5179 0.8223 0.5357 0.7277 0.7848

2 0.5446 0.8088 0.5089 0.7266 0.7792

3 0.5379 0.8037 0.5179 0.7532 0.7465

4 0.5737 0.7376 0.5000 0.7233 0.7775

5 0.5893 0.7414 0.5893 0.6935 0.7868

/home/runner/work/moabb/moabb/.venv/lib/python3.9/site-packages/braindecode/models/base.py:180: UserWarning: LogSoftmax final layer will be removed! Please adjust your loss function accordingly (e.g. CrossEntropyLoss)!

warnings.warn("LogSoftmax final layer will be removed! " +

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5182 0.9331 0.4808 1.4020 0.6821

2 0.5000 0.9396 0.5288 0.9328 0.6486

3 0.5026 0.8506 0.5000 0.8347 0.6767

4 0.5495 0.8173 0.4615 0.8196 0.6422

5 0.5365 0.8032 0.4808 0.8083 0.6723

/home/runner/work/moabb/moabb/.venv/lib/python3.9/site-packages/braindecode/models/base.py:180: UserWarning: LogSoftmax final layer will be removed! Please adjust your loss function accordingly (e.g. CrossEntropyLoss)!

warnings.warn("LogSoftmax final layer will be removed! " +

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5045 0.8913 0.5000 1.1910 0.7841

2 0.5491 0.8217 0.5000 0.7662 0.7542

3 0.5134 0.8495 0.5089 0.7758 0.7861

4 0.5379 0.8506 0.5089 0.7411 0.7915

5 0.5402 0.8245 0.5446 0.7243 0.7871

/home/runner/work/moabb/moabb/.venv/lib/python3.9/site-packages/braindecode/models/base.py:180: UserWarning: LogSoftmax final layer will be removed! Please adjust your loss function accordingly (e.g. CrossEntropyLoss)!

warnings.warn("LogSoftmax final layer will be removed! " +

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4948 0.9158 0.5000 1.7910 0.6496

2 0.4974 0.8917 0.4808 0.8633 0.6781

3 0.5182 0.8382 0.4712 0.7722 0.6714

4 0.5339 0.8021 0.5192 0.7762 0.6615

5 0.5026 0.8546 0.5385 0.7698 0.6729

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4978 0.7174 0.5357 0.6930 0.1924

2 0.4621 0.7250 0.5357 0.6930 0.1920

3 0.5179 0.7120 0.5268 0.6929 0.2198

4 0.5246 0.7090 0.5804 0.6929 0.1905

5 0.5335 0.6942 0.5804 0.6929 0.1907

6 0.4911 0.7287 0.5714 0.6928 0.1916

7 0.5312 0.7083 0.5625 0.6928 0.1905

8 0.4732 0.7385 0.5625 0.6927 0.2222

9 0.5312 0.6961 0.5714 0.6926 0.1965

10 0.5379 0.6966 0.5714 0.6926 0.1902

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4710 0.7273 0.5804 0.6922 0.1926

2 0.5000 0.7216 0.5714 0.6921 0.2217

3 0.5134 0.7185 0.5536 0.6920 0.1913

4 0.5446 0.7036 0.5536 0.6919 0.1900

5 0.4777 0.7256 0.5536 0.6918 0.1949

6 0.4955 0.7238 0.5536 0.6917 0.1909

7 0.4799 0.7195 0.5536 0.6916 0.2139

8 0.5335 0.7113 0.5536 0.6914 0.2032

9 0.5357 0.6996 0.5536 0.6913 0.1909

10 0.5000 0.7096 0.5536 0.6912 0.1923

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5208 0.7214 0.5000 0.6936 0.2044

2 0.5026 0.7138 0.4615 0.6936 0.1806

3 0.4766 0.7324 0.4808 0.6936 0.1666

Stopping since valid_loss has not improved in the last 3 epochs.

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4821 0.7345 0.5982 0.6926 0.2276

2 0.4754 0.7320 0.5536 0.6926 0.1909

3 0.5335 0.7119 0.5446 0.6925 0.1918

4 0.4955 0.7158 0.5268 0.6925 0.1909

5 0.5290 0.6973 0.5089 0.6925 0.1918

6 0.4799 0.7251 0.5089 0.6924 0.2177

7 0.5089 0.7050 0.5179 0.6924 0.1995

8 0.5335 0.6999 0.5179 0.6923 0.1900

9 0.5067 0.7165 0.5268 0.6923 0.1909

10 0.4933 0.7111 0.5268 0.6922 0.1924

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4661 0.7408 0.5481 0.6927 0.1702

2 0.5000 0.7198 0.5962 0.6926 0.1655

3 0.5052 0.7395 0.5192 0.6926 0.1658

4 0.4870 0.7254 0.5000 0.6926 0.1671

5 0.5208 0.7183 0.4904 0.6925 0.1638

6 0.5182 0.7054 0.5096 0.6925 0.1910

7 0.5521 0.7176 0.5000 0.6925 0.1697

8 0.5052 0.7164 0.5000 0.6924 0.1649

9 0.5339 0.7125 0.5000 0.6924 0.1645

10 0.5104 0.7055 0.5000 0.6924 0.1659

/home/runner/work/moabb/moabb/.venv/lib/python3.9/site-packages/braindecode/models/base.py:180: UserWarning: LogSoftmax final layer will be removed! Please adjust your loss function accordingly (e.g. CrossEntropyLoss)!

warnings.warn("LogSoftmax final layer will be removed! " +

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5179 0.9645 0.4286 0.6977 1.0799

2 0.4866 0.9577 0.4286 0.6977 1.1075

3 0.4732 1.0036 0.4018 0.6979 1.0711

Stopping since valid_loss has not improved in the last 3 epochs.

/home/runner/work/moabb/moabb/.venv/lib/python3.9/site-packages/braindecode/models/base.py:180: UserWarning: LogSoftmax final layer will be removed! Please adjust your loss function accordingly (e.g. CrossEntropyLoss)!

warnings.warn("LogSoftmax final layer will be removed! " +

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4955 0.9767 0.5536 0.6893 1.0879

2 0.5067 0.9224 0.5268 0.6897 1.0796

3 0.5402 0.8863 0.5268 0.6905 1.0873

Stopping since valid_loss has not improved in the last 3 epochs.

/home/runner/work/moabb/moabb/.venv/lib/python3.9/site-packages/braindecode/models/base.py:180: UserWarning: LogSoftmax final layer will be removed! Please adjust your loss function accordingly (e.g. CrossEntropyLoss)!

warnings.warn("LogSoftmax final layer will be removed! " +

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4922 0.9560 0.4808 0.6957 0.9414

2 0.5182 0.9880 0.4712 0.6948 0.9331

3 0.4792 1.0325 0.4712 0.6952 0.9457

4 0.4870 1.0289 0.5000 0.6965 0.9550

Stopping since valid_loss has not improved in the last 3 epochs.

/home/runner/work/moabb/moabb/.venv/lib/python3.9/site-packages/braindecode/models/base.py:180: UserWarning: LogSoftmax final layer will be removed! Please adjust your loss function accordingly (e.g. CrossEntropyLoss)!

warnings.warn("LogSoftmax final layer will be removed! " +

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.4888 0.9229 0.5268 0.6930 1.0956

2 0.4643 0.9981 0.5179 0.6931 1.0822

3 0.4754 0.9840 0.4911 0.6932 1.0861

Stopping since valid_loss has not improved in the last 3 epochs.

/home/runner/work/moabb/moabb/.venv/lib/python3.9/site-packages/braindecode/models/base.py:180: UserWarning: LogSoftmax final layer will be removed! Please adjust your loss function accordingly (e.g. CrossEntropyLoss)!

warnings.warn("LogSoftmax final layer will be removed! " +

epoch train_acc train_loss valid_acc valid_loss dur

------- ----------- ------------ ----------- ------------ ------

1 0.5286 0.9386 0.5000 0.6957 0.9302

2 0.5312 0.9320 0.4712 0.6970 0.9186

3 0.4661 1.0454 0.4904 0.6987 0.9371

Stopping since valid_loss has not improved in the last 3 epochs.

BNCI2014-004-CrossSession: 100%|##########| 2/2 [02:20<00:00, 70.22s/it]

| dataset | evaluation | pipeline | avg score |
|---|---|---|---|
| BNCI2014-001 | CrossSession | braindecode_EEGNetv4_resample | 0.560812 |
| BNCI2014-001 | CrossSession | braindecode_ShallowFBCSPNet | 0.512201 |
| BNCI2014-001 | CrossSession | braindecode_EEGInception | 0.484857 |
| BNCI2014-004 | CrossSession | braindecode_EEGNetv4_resample | 0.518677 |
| BNCI2014-004 | CrossSession | braindecode_ShallowFBCSPNet | 0.544734 |
| BNCI2014-004 | CrossSession | braindecode_EEGInception | 0.564226 |

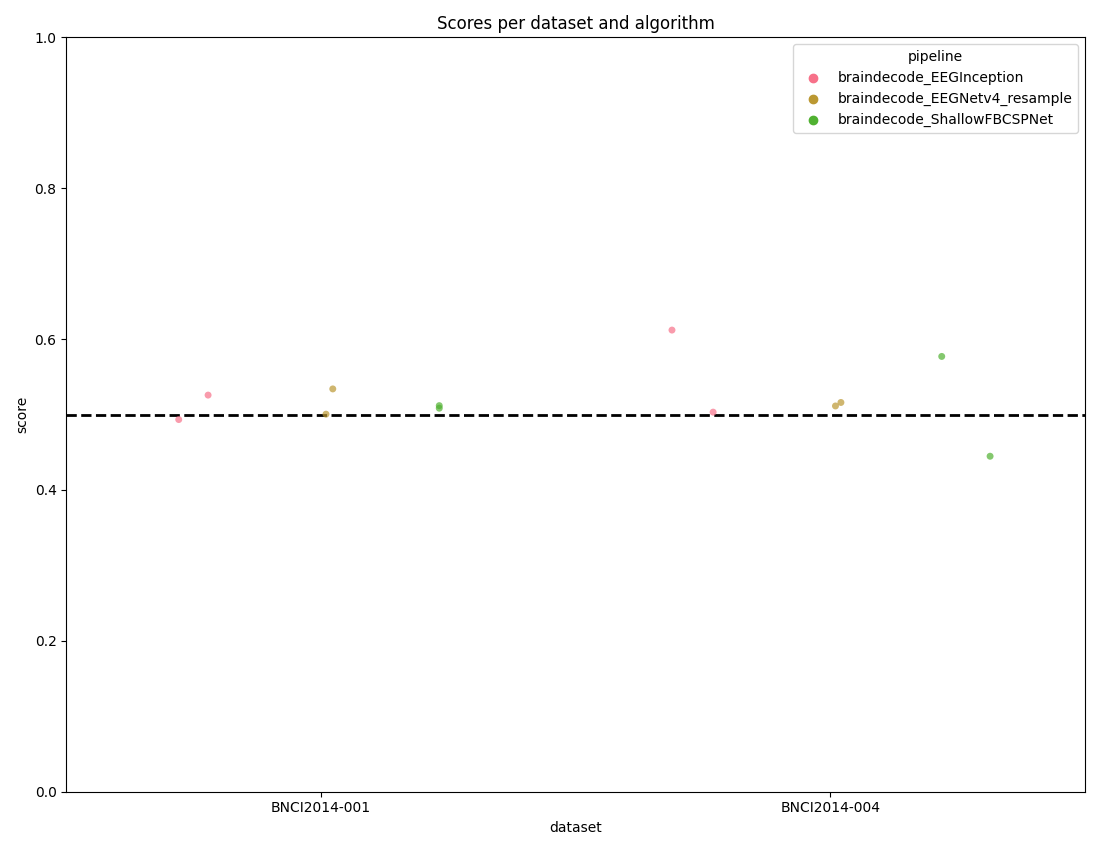

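The deep learning architectures implemented in MOABB using Braindecode are:

- Shallow Convolutional Network [1]
- Deep Convolutional Network [1]
- EEGNetv4 [2]
- EEGInception [3]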

The benchmark prints a summary of the results. The detailed results are returned as a pandas DataFrame, which can be used to generate figures. The analysis and figures are saved in the benchmark folder.
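The averaged scores in the printed summary can also be recomputed directly from the detailed DataFrame. The sketch below is a minimal illustration, assuming the DataFrame has one row per evaluated subject with `dataset`, `evaluation`, `pipeline`, `subject` and `score` columns (these column names are an assumption; check them against your MOABB version). `toy_results` is a hand-made stand-in with placeholder pipeline names and scores, not real benchmark output.

```python
import pandas as pd

# Hypothetical stand-in for the DataFrame returned by the benchmark.
# Assumption: columns "dataset", "evaluation", "pipeline", "subject", "score".
# The pipeline names and scores are placeholders, not real results.
toy_results = pd.DataFrame(
    {
        "dataset": ["BNCI2014-001"] * 4 + ["BNCI2014-004"] * 4,
        "evaluation": ["CrossSession"] * 8,
        "pipeline": ["pipeline_A", "pipeline_A", "pipeline_B", "pipeline_B"] * 2,
        "subject": [1, 2, 1, 2] * 2,
        "score": [0.50, 0.60, 0.40, 0.60, 0.70, 0.50, 0.55, 0.65],
    }
)

# Average score per dataset, evaluation and pipeline (long format,
# one row per combination, like the printed summary).
summary = (
    toy_results.groupby(["dataset", "evaluation", "pipeline"])["score"]
    .mean()
    .rename("avg score")
    .reset_index()
)
print(summary)

# Same averages laid out as a dataset x pipeline grid for side-by-side reading.
grid = summary.pivot(index="dataset", columns="pipeline", values="avg score")
print(grid.round(3))
```

The long format mirrors the layout of the benchmark summary, while the pivoted grid makes it easier to compare pipelines on each dataset at a glance.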

score_plot(results)

plt.show()

/home/runner/work/moabb/moabb/moabb/analysis/plotting.py:70: UserWarning: The palette list has more values (6) than needed (3), which may not be intended.

sea.stripplot(

References

- [1] Schirrmeister, R. T., Springenberg, J. T., Fiederer, L. D. J., Glasstetter, M., Eggensperger, K., Tangermann, M., … & Ball, T. (2017). Deep learning with convolutional neural networks for EEG decoding and visualization. Human Brain Mapping, 38(11), 5391-5420.

- [2] Lawhern, V. J., Solon, A. J., Waytowich, N. R., Gordon, S. M., Hung, C. P., & Lance, B. J. (2018). EEGNet: a compact convolutional neural network for EEG-based brain-computer interfaces. Journal of Neural Engineering, 15(5), 056013.

- [3] Santamaria-Vazquez, E., Martinez-Cagigal, V., Vaquerizo-Villar, F., & Hornero, R. (2020). EEG-inception: A novel deep convolutional neural network for assistive ERP-based brain-computer interfaces. IEEE Transactions on Neural Systems and Rehabilitation Engineering.

Total running time of the script: (3 minutes 0.679 seconds)

Estimated memory usage: 667 MB