
Examples of how to use MOABB to benchmark pipelines.

Benchmarking with MOABB

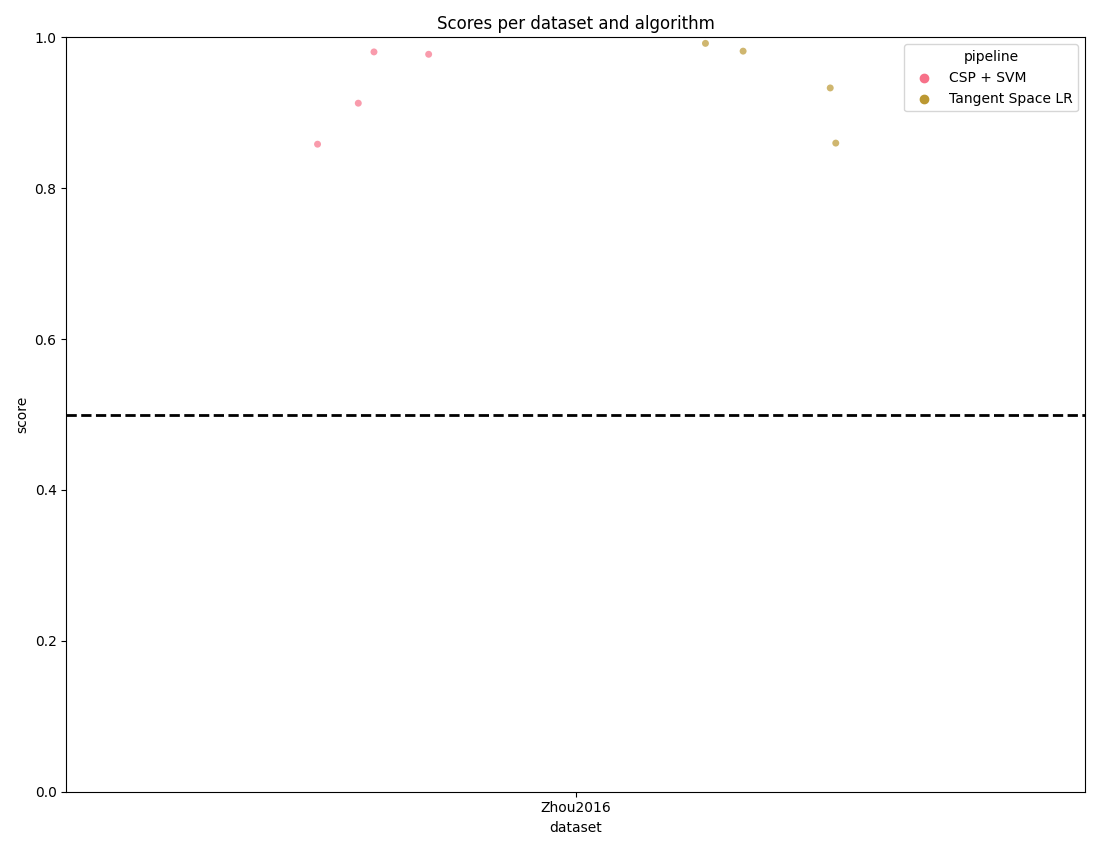

This example shows how to use MOABB to benchmark a set of pipelines on all available datasets. For this example, we will use only one dataset to keep the computation time low, but this benchmark is designed to easily scale to many datasets.

```python
# Authors: Sylvain Chevallier <sylvain.chevallier@universite-paris-saclay.fr>
#
# License: BSD (3-clause)

import matplotlib.pyplot as plt

from moabb import benchmark, set_log_level
from moabb.analysis.plotting import score_plot
from moabb.paradigms import LeftRightImagery

set_log_level("info")
```

Loading the pipelines

The ML pipelines used in the benchmark are defined in YAML files that follow a simple format. This makes it easier to share and reuse pipelines across benchmarks and to reproduce state-of-the-art results.

MOABB comes with a complete list of pipelines that covers most of the successful approaches in the literature. You can find them in the pipelines folder. For this example, we will use a folder with only 2 pipelines to keep the computation time low.

Below is an example of a pipeline defined in YAML. It specifies which paradigms the pipeline can be used with, the original publications, and the steps to perform using the scikit-learn API. Here it is a CSP + SVM pipeline: covariance matrices are estimated to compute CSP filters, and a linear SVM is then trained on the CSP-filtered signals.

```yaml
name: CSP + SVM

paradigms:
  - LeftRightImagery

citations:
  - https://doi.org/10.1007/BF01129656
  - https://doi.org/10.1109/MSP.2008.4408441

pipeline:
  - name: Covariances
    from: pyriemann.estimation
    parameters:
      estimator: oas

  - name: CSP
    from: pyriemann.spatialfilters
    parameters:
      nfilter: 6

  - name: SVC
    from: sklearn.svm
    parameters:
      kernel: "linear"
```
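Conceptually, each entry under `pipeline` names a class (`name`), the module it is imported from (`from`) and its keyword arguments (`parameters`), and the steps are chained in order. The following is a minimal sketch of that mapping. It assumes the YAML has already been parsed into a dict, for example with PyYAML's `yaml.safe_load`. `resolve_steps` and `build_pipeline` are hypothetical helpers and are not MOABB's own loader.

```python
import importlib

# The CSP + SVM YAML above, written out as the dict a YAML parser would return.
CSP_SVM_SPEC = {
    "name": "CSP + SVM",
    "paradigms": ["LeftRightImagery"],
    "citations": [
        "https://doi.org/10.1007/BF01129656",
        "https://doi.org/10.1109/MSP.2008.4408441",
    ],
    "pipeline": [
        {"name": "Covariances", "from": "pyriemann.estimation",
         "parameters": {"estimator": "oas"}},
        {"name": "CSP", "from": "pyriemann.spatialfilters",
         "parameters": {"nfilter": 6}},
        {"name": "SVC", "from": "sklearn.svm",
         "parameters": {"kernel": "linear"}},
    ],
}


def resolve_steps(spec):
    """Hypothetical helper: one (module, class name, kwargs) triple per step, in order."""
    steps = []
    for step in spec["pipeline"]:
        # An empty `parameters:` entry parses to None, so fall back to {}.
        kwargs = dict(step.get("parameters") or {})
        steps.append((step["from"], step["name"], kwargs))
    return steps


def build_pipeline(spec):
    """Hypothetical helper: instantiate each step and chain them.

    Requires scikit-learn and pyRiemann to be installed.
    """
    from sklearn.pipeline import make_pipeline

    estimators = []
    for module_name, class_name, kwargs in resolve_steps(spec):
        cls = getattr(importlib.import_module(module_name), class_name)
        estimators.append(cls(**kwargs))
    return make_pipeline(*estimators)
```

If pyRiemann and scikit-learn are installed, `build_pipeline(CSP_SVM_SPEC)` should produce the same chain as `make_pipeline(Covariances(estimator="oas"), CSP(nfilter=6), SVC(kernel="linear"))`.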

The sample_pipelines folder contains a second pipeline, a logistic regression performed in the tangent space using Riemannian geometry.
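The tangent-space step maps each covariance matrix to a flat vector, and a linear classifier such as logistic regression can then be trained on those vectors. The following is a minimal NumPy sketch of that projection. For simplicity it assumes the arithmetic mean of the covariances as the reference point. pyRiemann's `TangentSpace`, which such a pipeline would typically rely on, defaults to the Riemannian mean instead. `tangent_space` is a hypothetical helper.

```python
import numpy as np


def _apply_to_eigvals(mat, fun):
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    eigvals, eigvecs = np.linalg.eigh(mat)
    return (eigvecs * fun(eigvals)) @ eigvecs.T


def tangent_space(covs, ref):
    """Hypothetical helper: project SPD matrices onto the tangent space at `ref`.

    covs: array of shape (n_trials, n_channels, n_channels)
    ref:  SPD reference matrix of shape (n_channels, n_channels)
    Returns an array of shape (n_trials, n_channels * (n_channels + 1) // 2).
    """
    n_channels = ref.shape[0]
    ref_isqrt = _apply_to_eigvals(ref, lambda v: 1.0 / np.sqrt(v))
    rows, cols = np.triu_indices(n_channels)
    # Off-diagonal terms get a sqrt(2) weight so the vector's Euclidean norm
    # equals the Frobenius norm of the full symmetric matrix.
    weights = np.where(rows == cols, 1.0, np.sqrt(2.0))
    features = []
    for cov in covs:
        # Whiten by the reference point, then take the matrix logarithm.
        log_mat = _apply_to_eigvals(ref_isqrt @ cov @ ref_isqrt, np.log)
        features.append(weights * log_mat[rows, cols])
    return np.array(features)
```

As an example, `tangent_space(covs, covs.mean(axis=0))` returns one feature vector per trial. These vectors could then be passed to scikit-learn's `LogisticRegression`.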

Selecting the datasets (optional)

If you want to limit your benchmark to a subset of datasets, you can use the include_datasets and exclude_datasets arguments. You need to provide either the dataset objects or the dataset codes. To get the list of available dataset codes for a given paradigm, you can use the following command:

```python
paradigm = LeftRightImagery()
for d in paradigm.datasets:
    print(d.code)
```

```text
BNCI2014-001
BNCI2014-004
Cho2017
GrosseWentrup2009
Lee2019-MI
Liu2024
PhysionetMotorImagery
Schirrmeister2017
Shin2017A
Stieger2021
Weibo2014
Zhou2016
```

In this example, we will use only the last dataset, Zhou2016.
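The two arguments act as an allow-list and a deny-list over the dataset codes printed above. The following plain-Python sketch shows that filtering logic. `select_datasets` is a hypothetical helper. Rejecting both lists at once is a choice made for this sketch and is not documented MOABB behaviour.

```python
def select_datasets(codes, include=None, exclude=None):
    """Hypothetical helper: filter dataset codes with an allow-list or a deny-list."""
    if include is not None and exclude is not None:
        # This sketch only accepts one of the two lists at a time.
        raise ValueError("Specify include or exclude, not both.")
    if include is not None:
        wanted = set(include)
        return [code for code in codes if code in wanted]
    if exclude is not None:
        unwanted = set(exclude)
        return [code for code in codes if code not in unwanted]
    return list(codes)


# Dataset codes printed above for LeftRightImagery.
LR_CODES = [
    "BNCI2014-001", "BNCI2014-004", "Cho2017", "GrosseWentrup2009",
    "Lee2019-MI", "Liu2024", "PhysionetMotorImagery", "Schirrmeister2017",
    "Shin2017A", "Stieger2021", "Weibo2014", "Zhou2016",
]

print(select_datasets(LR_CODES, include=["Zhou2016"]))  # ['Zhou2016']
```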

Running the benchmark

The benchmark is run using the benchmark function. You need to specify the folder containing the pipelines, the kind of evaluation, and the paradigm to use. By default, the benchmark uses all available datasets for all paradigms listed in the pipelines. You can restrict it to specific evaluations and paradigms using the evaluations and paradigms arguments.
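The default behaviour described above amounts to collecting every paradigm declared in the pipeline files. If the `paradigms` argument is given, only those paradigms are kept. The following is a minimal sketch. It assumes each pipeline spec is a dict with a `paradigms` list, as in the YAML example. `paradigms_to_run` is hypothetical and is not MOABB's implementation. The third spec is also hypothetical and only illustrates the filtering.

```python
def paradigms_to_run(specs, paradigms=None):
    """Hypothetical helper: map each paradigm to the pipeline names declaring it."""
    by_paradigm = {}
    for spec in specs:
        for paradigm in spec["paradigms"]:
            by_paradigm.setdefault(paradigm, []).append(spec["name"])
    if paradigms is not None:
        # Keep only the requested paradigms, as the `paradigms` argument does.
        by_paradigm = {p: names for p, names in by_paradigm.items() if p in paradigms}
    return by_paradigm


specs = [
    {"name": "CSP + SVM", "paradigms": ["LeftRightImagery"]},
    {"name": "Tangent Space LR", "paradigms": ["LeftRightImagery"]},
    {"name": "Hypothetical ERP pipeline", "paradigms": ["P300"]},
]
print(paradigms_to_run(specs, paradigms=["LeftRightImagery"]))
# {'LeftRightImagery': ['CSP + SVM', 'Tangent Space LR']}
```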

To save computation time, the results are cached. If you want to re-run the benchmark, you can set the overwrite argument to True.
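The caching behaviour can be pictured as follows. Each (dataset, pipeline) result is looked up first and is computed only if it is missing or if `overwrite=True`. The following in-memory sketch illustrates that idea. `CachedRunner` is hypothetical and does not reflect how MOABB actually stores results on disk.

```python
class CachedRunner:
    """Hypothetical helper: compute a score once per (dataset, pipeline) key."""

    def __init__(self, evaluate):
        self.evaluate = evaluate  # callable(dataset, pipeline) -> score
        self.cache = {}
        self.n_computed = 0

    def run(self, datasets, pipelines, overwrite=False):
        results = {}
        for dataset in datasets:
            for pipeline in pipelines:
                key = (dataset, pipeline)
                # Recompute only when the result is missing or overwrite is set.
                if overwrite or key not in self.cache:
                    self.cache[key] = self.evaluate(dataset, pipeline)
                    self.n_computed += 1
                results[key] = self.cache[key]
        return results


# Stand-in evaluation function, not a real scoring procedure.
runner = CachedRunner(lambda d, p: len(d) + len(p))
runner.run(["Zhou2016"], ["CSP + SVM", "Tangent Space LR"])
runner.run(["Zhou2016"], ["CSP + SVM", "Tangent Space LR"])  # served from the cache
print(runner.n_computed)  # 2
runner.run(["Zhou2016"], ["CSP + SVM"], overwrite=True)
print(runner.n_computed)  # 3
```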

You can also specify the folder used to cache the results (the results argument) and the folder where the analysis and figures are saved (the output argument). By default, the results are saved in the results folder, and the analysis and figures are saved in the benchmark folder.

```python
results = benchmark(
    pipelines="./sample_pipelines/",
    evaluations=["WithinSession"],
    paradigms=["LeftRightImagery"],
    include_datasets=["Zhou2016"],
    results="./results/",
    overwrite=False,
    plot=False,
    output="./benchmark/",
)
```

Zhou2016-WithinSession: 0%| | 0/4 [00:00<?, ?it/s]MNE_DATA is not already configured. It will be set to default location in the home directory - /home/runner/mne_data

All datasets will be downloaded to this location, if anything is already downloaded, please move manually to this location


Zhou2016-WithinSession: 100%|##########| 4/4 [00:19<00:00, 4.85s/it]

```text
    dataset     evaluation          pipeline  avg score
0  Zhou2016  WithinSession         CSP + SVM   0.932315
1  Zhou2016  WithinSession  Tangent Space LR   0.941601
```

The benchmark function prints a summary of the results. The detailed results are returned as a pandas DataFrame, which can be used to generate figures. The analysis and figures are saved in the benchmark folder.
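Because the detailed results are a pandas DataFrame, you can recompute or refine the printed summary with ordinary pandas operations. The following is a minimal sketch. It assumes the DataFrame has `dataset`, `evaluation`, `pipeline`, `subject` and `score` columns. The toy rows are made-up values for illustration only and are not benchmark results.

```python
import pandas as pd

# Made-up rows standing in for the DataFrame returned by benchmark().
toy_results = pd.DataFrame(
    {
        "dataset": ["Zhou2016"] * 4,
        "evaluation": ["WithinSession"] * 4,
        "pipeline": ["CSP + SVM", "CSP + SVM", "Tangent Space LR", "Tangent Space LR"],
        "subject": [1, 2, 1, 2],
        "score": [0.50, 1.00, 0.75, 1.00],
    }
)

# Average score per dataset/evaluation/pipeline, similar to the printed summary.
summary = (
    toy_results.groupby(["dataset", "evaluation", "pipeline"])["score"]
    .mean()
    .reset_index()
    .rename(columns={"score": "avg score"})
)
print(summary)

# Per-subject view: one row per subject, one column per pipeline.
per_subject = toy_results.pivot_table(index="subject", columns="pipeline", values="score")
print(per_subject)
```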

```python
score_plot(results)
plt.show()
```


Total running time of the script: (0 minutes 20.488 seconds)

Estimated memory usage: 351 MB