moabb.datasets.Thielen2021

- class moabb.datasets.Thielen2021(subjects=None, sessions=None)

c-VEP dataset from Thielen et al. (2021)

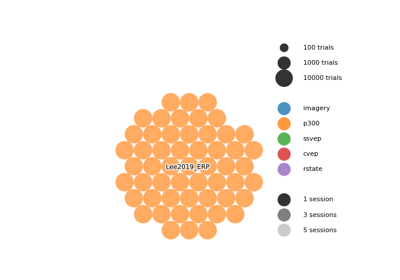

Dataset summary

#Subj: 30

#Chan: 8

#Trials / class: 5

Trials length: 31.5 s

Freq: 512 Hz

#Sessions: 1

#Trial classes: 20

#Epochs classes: 2

#Epochs / class: 18900 NT / 18900 T

Codes: Gold codes

Presentation rate: 60 Hz

Participants

Population: healthy

Age: 40.5 (range: 19-62) years

Equipment

Amplifier: Biosemi ActiveTwo

Electrodes: sintered Ag/AgCl active electrodes

Montage: 10-20

Reference: CAR

Preprocessing

Data state: preprocessed

Bandpass filter: 2-30 Hz

Steps: high-pass filter, low-pass filter, downsampling

Re-reference: CAR

Data Access

DOI: 10.1088/1741-2552/abecef

Data URL: https://doi.org/10.34973/9txv-z787

Repository: Radboud

Experimental Protocol

Paradigm: cvep

Feedback: none

Stimulus: visual

Dataset [1] from the study on zero-training c-VEP [2].

Dataset description

EEG was recorded at a sampling rate of 512 Hz with a Biosemi ActiveTwo amplifier and 8 Ag/AgCl electrodes. The electrodes were placed at Fz, T7, O1, POz, Oz, Iz, O2, and T8 and connected as EXG channels. This custom montage was optimized for c-VEP in a previous study, see [3].

During the experiment, participants operated a 4 x 5 visual speller brain-computer interface (BCI) with 20 classes passively (i.e., without feedback). Each cell of the symbol grid was luminance-modulated at full contrast by a pseudo-random noise-code taken from a set of modulated Gold codes. These codes are binary, contain a balanced number of ones and zeros, and have a maximum run length of 2 bits. The codes were presented at a rate of 60 Hz. Because one cycle of these modulated Gold codes contains 126 bits, one cycle lasts 126 / 60 = 2.1 seconds.
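The code properties and cycle duration stated above can be checked with a few lines of Python. This is a minimal sketch: the helper names (`max_run_length`, `is_balanced`, `cycle_duration`) and the toy sequence are illustrative assumptions and are neither part of MOABB nor one of the stimulus codes used in the study.

```python
from itertools import groupby

PRESENTATION_RATE_HZ = 60  # presentation rate from the dataset description
BITS_PER_CYCLE = 126       # bits per code cycle from the dataset description


def max_run_length(code):
    """Return the length of the longest run of identical consecutive bits."""
    return max(len(list(run)) for _, run in groupby(code))


def is_balanced(code):
    """Return True if the code contains equally many ones and zeros."""
    return 2 * sum(code) == len(code)


def cycle_duration(n_bits, rate_hz):
    """Return the duration in seconds of one code cycle."""
    return n_bits / rate_hz


# Hypothetical toy sequence, used only to exercise the helpers
toy_code = [1, 0, 0, 1, 1, 0, 1, 0]

print(max_run_length(toy_code))  # longest run in the toy sequence
print(is_balanced(toy_code))     # whether ones and zeros are equally frequent
print(cycle_duration(BITS_PER_CYCLE, PRESENTATION_RATE_HZ))  # seconds per cycle
```

The same two checks could be applied to any binary stimulus code to confirm the balance and run-length constraints described in the text.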

The experiment comprised five blocks of 20 trials each. Each trial started with a cueing phase, during which the target symbol was highlighted in green for 1 second. Participants then kept their gaze fixated on the target symbol while all symbols flashed according to their respective pseudo-random noise-codes for 31.5 seconds (i.e., 15 code cycles). Trials within a block were presented in randomized order, so that each symbol was attended to once per block.
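The trial structure can be worked through numerically. The sketch below derives the nominal trial length and trial counts from the figures given in the description, and illustrates one way a randomized block order could be drawn. The function `make_block_order` and the fixed seed are assumptions for illustration and do not reproduce the original experiment code.

```python
import random

N_BLOCKS = 5
N_CLASSES = 20
CYCLES_PER_TRIAL = 15
BITS_PER_CYCLE = 126
PRESENTATION_RATE_HZ = 60
SAMPLING_RATE_HZ = 512

# Nominal quantities derived from the description
bits_per_trial = CYCLES_PER_TRIAL * BITS_PER_CYCLE              # bits shown per trial
trial_duration_s = bits_per_trial / PRESENTATION_RATE_HZ        # seconds per trial
samples_per_trial = round(trial_duration_s * SAMPLING_RATE_HZ)  # EEG samples per trial
trials_per_participant = N_BLOCKS * N_CLASSES                   # trials per participant


def make_block_order(n_classes, rng):
    """Return a random permutation of class indices, each appearing once."""
    return rng.sample(range(n_classes), n_classes)


rng = random.Random(0)  # fixed seed only to keep the sketch reproducible
blocks = [make_block_order(N_CLASSES, rng) for _ in range(N_BLOCKS)]

print(bits_per_trial, trial_duration_s, samples_per_trial, trials_per_participant)
```

Each entry of `blocks` is one block's cue order, so every symbol is the target exactly once per block and five times over the whole experiment, matching the 5 trials per class listed in the summary.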

Note: only the offline data of this study are loaded here; the online phase is ignored.
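A minimal loading sketch follows, assuming MOABB is installed and that the data can be downloaded from the repository on first use. `Thielen2021` and `get_data` come from MOABB; the helpers `load_offline_data` and `summarize` are hypothetical names introduced here for illustration.

```python
def load_offline_data(subject=1):
    """Return MOABB's nested dict of recordings for one participant."""
    # Imported lazily so that defining this helper does not require MOABB.
    from moabb.datasets import Thielen2021

    dataset = Thielen2021()
    # get_data returns a nested dict: {subject: {session: {run: mne.io.Raw}}}
    return dataset.get_data(subjects=[subject])


def summarize(data):
    """List the (subject, session, run) keys found in a get_data result."""
    return [
        (subject, session, run)
        for subject, sessions in data.items()
        for session, runs in sessions.items()
        for run in runs
    ]
```

Calling `summarize(load_offline_data(1))` would list the sessions and runs available for participant 1. The first call triggers a download, so it may take a while and requires network access.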

References

[1] Thielen, J., Marsman, P., Farquhar, J., & Desain, P. (2023). From full calibration to zero training for a code-modulated visual evoked potentials brain computer interface. Version 3. Radboud University. (dataset). DOI: https://doi.org/10.34973/9txv-z787

[2] Thielen, J., Marsman, P., Farquhar, J., & Desain, P. (2021). From full calibration to zero training for a code-modulated visual evoked potentials for brain–computer interface. Journal of Neural Engineering, 18(5), 056007. DOI: https://doi.org/10.1088/1741-2552/abecef

[3] Ahmadi, S., Borhanazad, M., Tump, D., Farquhar, J., & Desain, P. (2019). Low channel count montages using sensor tying for VEP-based BCI. Journal of Neural Engineering, 16(6), 066038. DOI: https://doi.org/10.1088/1741-2552/ab4057

Notes

Added in version 0.6.0.